HBM-on-GPU: Powering the Next Wave of AI Accelerators – A Specialised Future, Not for Consumer Graphics Cards

The Future of AI Acceleration: Imec’s Groundbreaking 3D HBM-on-GPU Research

The relentless demand for artificial intelligence capabilities continues to push the boundaries of computing. As AI models grow exponentially in complexity and size, the need for faster, more efficient processing and memory solutions becomes paramount. This quest for advanced performance is driving innovative research into novel chip architectures, particularly for specialised AI accelerators that power data centres worldwide.

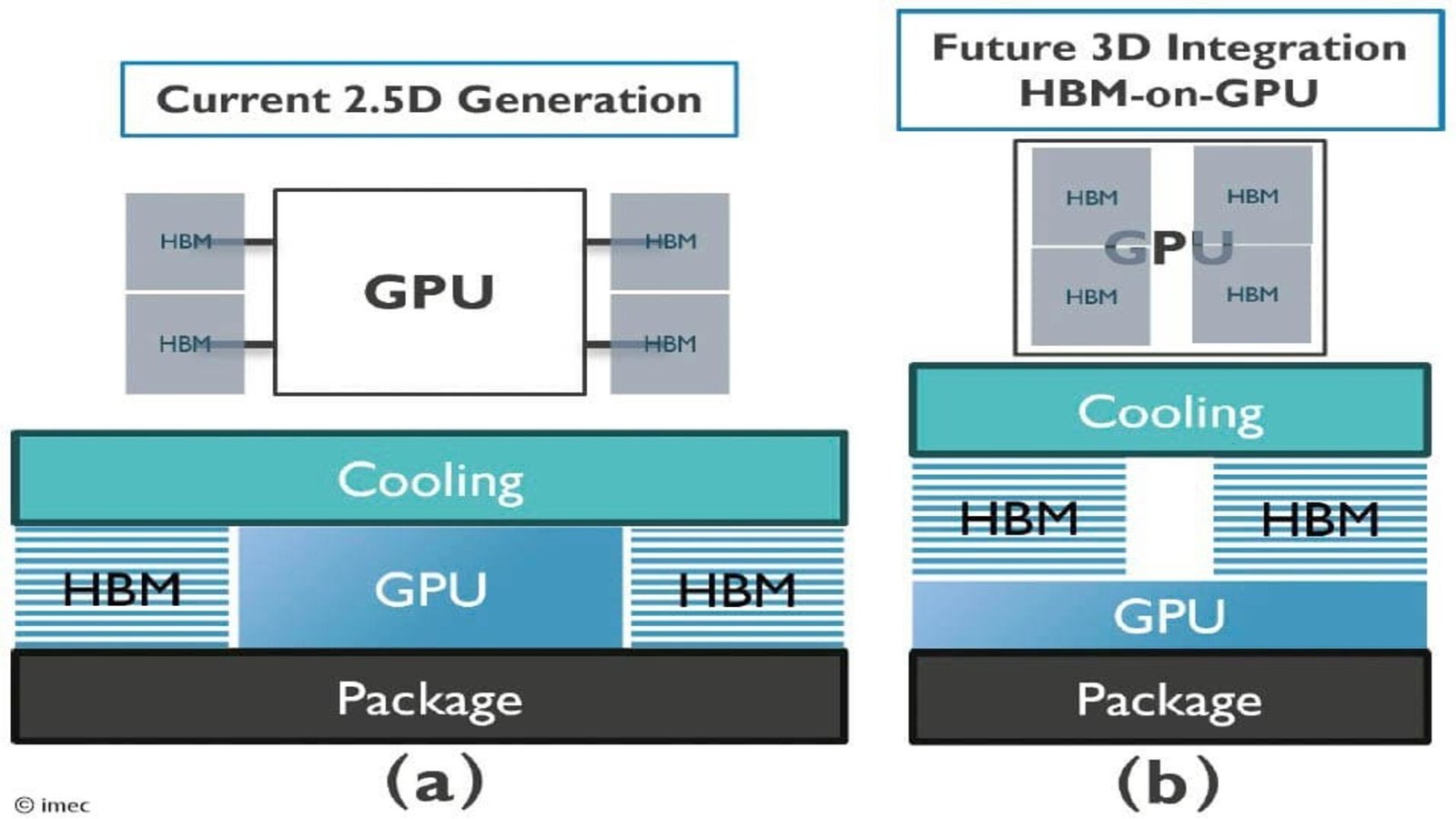

At the forefront of this innovation is Imec, a renowned research and innovation hub in nanoelectronics and digital technologies. Their recent groundbreaking study into 3D High-Bandwidth Memory (HBM) integrated directly onto Graphics Processing Units (GPUs) represents a significant leap forward. This advanced approach promises to revolutionise how AI workloads are handled, addressing critical bottlenecks in current systems.

High-Bandwidth Memory (HBM) is already a pivotal component in high-performance computing. It stacks multiple DRAM dies vertically and connects them to the processor over a very wide interface, which dramatically increases bandwidth compared to conventional memory chips laid out side by side on a board. Today these stacks typically sit beside the GPU within the same package. By stacking HBM in a 3D configuration directly on top of the GPU, the potential for data transfer speed and memory density rises well beyond what current layouts offer, which is essential for next-generation AI.
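The bandwidth advantage of a wide memory interface can be made concrete with a little arithmetic: peak bandwidth is roughly the interface width multiplied by the per-pin data rate. The sketch below is illustrative only. The function name is hypothetical, and the figures (a 1024-bit HBM3 stack at 6.4 Gb/s per pin, a 32-bit GDDR6 chip at 16 Gb/s per pin) are commonly quoted values for those memory types, not numbers taken from Imec's study.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth of one memory device in gigabytes per second.

    bus_width_bits: number of data pins on the interface
    pin_rate_gbit_per_s: data rate of each pin in gigabits per second
    """
    # Total gigabits per second across all pins, divided by 8 bits per byte.
    return bus_width_bits * pin_rate_gbit_per_s / 8


# Assumed, commonly quoted figures (not from the Imec study).
hbm3_stack = peak_bandwidth_gb_per_s(bus_width_bits=1024, pin_rate_gbit_per_s=6.4)
gddr6_chip = peak_bandwidth_gb_per_s(bus_width_bits=32, pin_rate_gbit_per_s=16.0)

print(f"One HBM3 stack: about {hbm3_stack:.1f} GB/s")
print(f"One GDDR6 chip: about {gddr6_chip:.1f} GB/s")
```

Under these assumptions a single HBM stack delivers far more bandwidth than a single conventional memory chip even though each pin runs more slowly, because the interface is so much wider.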

Imec’s research specifically delves into the extreme density potential offered by this HBM-on-GPU architecture. Imagine a scenario where the processing core has immediate access to vast amounts of data without the traditional latency associated with external memory. This direct, high-speed access is critical for AI applications like deep learning, where vast datasets are constantly being processed and models frequently updated.

The benefits for AI accelerators are clear: superior data throughput enables faster training times for complex neural networks and more efficient inference execution. This translates directly into quicker development cycles for AI solutions and more responsive, powerful AI services. Such advancements are crucial for maintaining momentum in the rapidly evolving fields of machine learning and data analytics, driving new discoveries.

However, pioneering new technologies rarely comes without significant hurdles, and Imec’s study meticulously highlights these challenges. The sheer density and close proximity of components in a 3D HBM-on-GPU setup lead to profound thermal management issues. Packing more processing power and memory into a smaller volume inevitably generates immense heat, which must be dissipated effectively to ensure stable operation and longevity.
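A rough way to see why stacking makes cooling harder is a one-dimensional thermal resistance model, in which the chip temperature equals the coolant temperature plus power multiplied by the sum of the thermal resistances in the heat path. The sketch below is a simplified illustration, not Imec's model. Every number in it is hypothetical, and it assumes that heat from the GPU has to pass through the memory stacked above it before reaching the cooler.

```python
def chip_temperature_c(power_w: float, coolant_c: float, resistances_k_per_w: list[float]) -> float:
    """Steady-state temperature for heat flowing through resistances in series."""
    # Series thermal resistances add up, like series electrical resistors.
    return coolant_c + power_w * sum(resistances_k_per_w)


# Hypothetical values chosen only to illustrate the trend.
GPU_POWER_W = 400.0          # assumed GPU power
COOLANT_C = 30.0             # assumed coolant temperature
R_COOLER_K_PER_W = 0.10      # assumed cooler plus interface resistance
R_HBM_STACK_K_PER_W = 0.05   # assumed extra resistance of memory stacked on the GPU

# Memory beside the GPU: heat goes straight from the GPU into the cooler.
side_by_side_c = chip_temperature_c(GPU_POWER_W, COOLANT_C, [R_COOLER_K_PER_W])

# Memory on top of the GPU: heat must also cross the memory stack.
stacked_c = chip_temperature_c(GPU_POWER_W, COOLANT_C, [R_HBM_STACK_K_PER_W, R_COOLER_K_PER_W])

print(f"Memory beside GPU: about {side_by_side_c:.0f} C")
print(f"Memory on top of GPU: about {stacked_c:.0f} C")
```

Even a modest extra resistance in the heat path raises the temperature noticeably at high power, which is why the cooling approach matters so much for designs of this kind.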

Addressing these thermal challenges requires sophisticated cooling solutions that go far beyond what is currently available in commercial products. Research focuses on innovative heat sinks, microfluidic cooling, and advanced thermal interface materials to manage the extreme temperatures generated. The efficiency of these cooling systems will be a determining factor in the practical viability of 3D HBM-on-GPU designs for widespread adoption in AI infrastructure.

Beyond thermal concerns, the study also points to complex performance challenges relating to power delivery and signal integrity. Delivering stable power to densely packed, high-speed components within a 3D stack is inherently difficult. Maintaining signal quality and preventing interference across multiple layers of interconnected chips requires meticulous design and advanced manufacturing techniques to avoid data corruption and performance degradation.

The intricate interconnects between the HBM stacks and the GPU core demand ultra-reliable and low-latency pathways. Even minor imperfections in these connections can significantly impact overall performance and yield. Imec’s work is crucial in identifying these potential pitfalls early, allowing for focused research into robust design methodologies and advanced packaging technologies to overcome them, ensuring data integrity.

It’s vital for consumers to understand that while this technology promises a revolution for AI, it is not destined for their personal video cards anytime soon. The complexity, specialised manufacturing processes, and inherent high costs associated with 3D HBM-on-GPU designs make them prohibitive for the mass consumer market. This advanced hardware is engineered specifically for demanding enterprise and research applications.

Consumer graphics cards are designed for a different set of priorities, balancing performance with affordability, power consumption, and cooling requirements suitable for a home environment. The cutting-edge solutions explored by Imec are tailor-made for high-performance computing environments where maximum computational density and throughput are valued above all else, regardless of the significant investment required.

Therefore, while the advancements from Imec’s research will undoubtedly reshape the landscape of artificial intelligence, their direct impact will primarily be felt within data centres and specialised AI hardware. These innovations will enable the next generation of AI models to be trained faster and deployed more efficiently, unlocking unprecedented capabilities in various sectors from healthcare to autonomous systems.

In conclusion, Imec’s 3D HBM-on-GPU study is a testament to the ongoing innovation in semiconductor technology, highlighting both the immense potential for extreme density in AI accelerators and the significant engineering hurdles that must be overcome. This research, from an institute headquartered in Leuven, Belgium, paves the way for a future where AI systems are considerably more powerful and efficient than today's.
